- Published 23 Apr 2023

- Last Modified 29 Aug 2023

What is a DVI Cable?

A bridge between the worlds of VGA and HDMI, DVI cables had their place in history at the turn of the millennium.

A DVI cable is used to transfer a digital video signal from a device like a computer or DVD player to a display device such as a monitor, TV or projector. “DVI” stands for Digital Visual Interface. Adapters can often be used to connect DVI devices with non-DVI devices.

What is the DVI Interface?

DVI was the result of a concerted effort to standardise digital video transfer around 1999. This was a time when DVD players were the most popular form of home video entertainment, with VHS in terminal decline. Home computing was also becoming more normalised thanks to Microsoft and Apple, and flat-screen LCD displays were beginning to displace traditional cathode ray tube TVs and monitors. Blu-ray was still a few years away, and it would be almost a decade before the likes of Netflix would be streaming high-quality video over the internet.

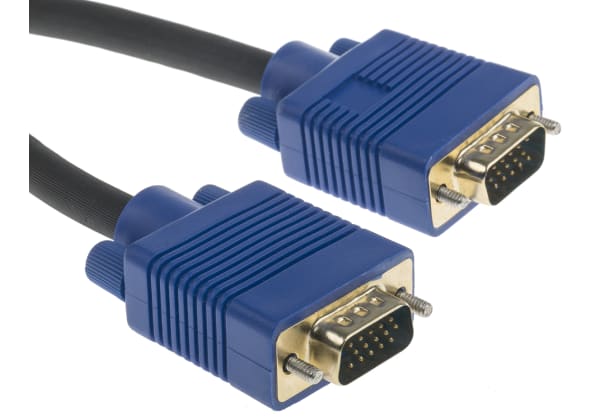

DVI was certainly a step up from the prevailing means of connecting computers to monitors – VGA (Video Graphics Array). By any standards, VGA had a good innings, being the go-to video connector from its invention in 1987 to the start of the millennium. If you’re familiar with computers from the Windows 95 era, you’ll recognise the outputs and cables, with their 15 pins and miniature bolts to hold them in place.

DVI Cable vs VGA

Technology and customer demands would eventually spell the end for VGA, however. While the technology was designed to work up to a maximum of 640×480 pixels, it could theoretically be pushed to 1080p. But because of the new digital video technologies coming in, manufacturers of graphics cards and other players didn’t really produce hardware to meet these needs in the analogue world.

VGA carries analogue RGBHV signals (red, green, blue, horizontal and vertical sync). DVI came with four analogue pins (RGBH) alongside 24 digital pins, most of which carry TMDS (transition-minimised differential signalling) data, so it can move much more data. The DVI image is uncompressed, and there’s no provision for skipping transmission when the colour of a pixel block doesn’t change between frames, as is the case with later technologies. That means that every pixel of every frame is sent to the display, which might provide optimum quality (analogous to a raw camera image), but it does limit resolution and/or frame rate because of the sheer amount of bandwidth required.
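To put a number on "every pixel of every frame", the raw data rate can be estimated as width × height × refresh rate × bits per pixel. The sketch below is illustrative only: the function name is made up for this example, 24 bits per pixel is an assumed colour depth, and the figure ignores blanking intervals and the encoding overhead on the wire.

```python
def raw_video_bitrate(width: int, height: int, refresh_hz: int,
                      bits_per_pixel: int = 24) -> int:
    """Bits per second needed to send every pixel of every frame uncompressed.

    Assumption: 24-bit colour by default; blanking and line-coding
    overhead are deliberately left out to keep the arithmetic simple.
    """
    return width * height * refresh_hz * bits_per_pixel


# Example: a 1920x1200 picture refreshed 60 times per second.
rate = raw_video_bitrate(1920, 1200, 60)
print(f"{rate / 1e9:.2f} Gbit/s")  # 3.32 Gbit/s
```

Even before any overhead is counted, a single 1920×1200 stream at 60 Hz needs a little over 3 Gbit/s, which is why resolution and refresh rate trade off against each other on an uncompressed link.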

However, in a world that was transitioning between analogue and digital displays, it was just perfect. Nobody wanted to have to change their DVD player and TV, or computer and monitor when they could just change one. With DVI’s ability to mix analogue and digital, consumers and businesses could upgrade hardware at their own pace, item by item.

But DVI’s Achilles’ heel was never the quality of the image sent to the display. It is an excellent system that can easily handle 1920×1200 movies at 60 Hz, which was enough for the TVs and monitors of the day. It was HDMI that led to the demise of DVI. The drawback of both VGA and DVI was that they were image-only systems – sound signals had to be sent via completely different cables. PC owners would need separate speakers or headphones, and signals to a TV would either need separate sound cables or, more likely, just use the SCART cables that were still the norm.

With HDMI, just one cable carries high-quality digital video and sound signals. If a laptop had a DVI port (most did from the early 2000s), it was awkward to connect it to a TV to watch DVDs or view digital home movies because the sound would need to be connected separately, usually via the headphone socket – not exactly optimum.

DVI vs HDMI

HDMI (High-Definition Multimedia Interface) came out in 2002, just three years after the introduction of DVI, and the system was adopted widely by manufacturers of media players, computers, TVs, monitors and projectors. It would quickly become the standard (and remains the standard to this day, albeit in newer versions).

DVI would of course continue to be used, despite the emergence of HDMI. Laptops are expected to last around five years, and although desktop PCs can easily change graphics cards, it’s an expense that many consider pointless. Plus, there are plenty of occasions where there’s no need to have sound going to the display, such as the typical office setup, where users will use headphones. Just as DVI coexisted with VGA for several years, so DVI and HDMI continued to work together.

Most graphics cards and monitors from 2002 onwards had HDMI and DVI ports (indeed, VGA stubbornly persisted until well into the 2010s). It’s not that new technology will always win straight away; it’s that millions of consumers and businesses are running legacy equipment and they need to be able to connect things together. If you shop around, you can still find mid-range PC graphics cards that have a DVI port, and they’re great for dual-monitor setups – you can use that old 1280×1024 as a reference monitor while you use your 1920×1200 as your main screen.

HDMI vs VGA vs DVI

The history of video cables goes all the way back to composite video over RCA connectors, which was introduced in the 1950s (and is still used to this day). Along the way, demands for quality have led to the development of S-Video, SCART, VGA, DVI, and HDMI 1.0–2.1. HDMI is the superior system, applicable to modern 4K TVs. However, the others are still pretty widely used, particularly with legacy systems or where high quality isn’t necessary, such as in simple low-resolution monitors where upgrading to HD or 4K would be surplus to requirements.

What is a DVI Cable Used for?

A DVI cable is used to connect two DVI ports (for example between a PC and a monitor). Since DVI-I carries both digital and analogue signals, it can be used with an adapter to connect a DVI port with either a legacy VGA port or a modern HDMI port.

What Does a DVI Cable Look Like?

DVI ports are recognisable from their block of square sockets arranged in a matrix three high, and eight across, although various cables will have different arrangements of pins (see the diagrams below). There’s also a straight or cross-shaped slot on one side. Like VGA, DVI sockets usually come with two threaded nuts, and the cables have bolts attached to secure them in place. VGA connectors have 15 pins and a curved trapezium profile. HDMI sockets are lower profile, with barely visible connectors and no fastening bolts.

A typical DVI port is shown in the image to the right

Types of DVI Cable

There are four DVI cable types: DVI-I, DVI-D, DVI-A and DVI-DL.

DVI-I Cable

DVI Integrated (DVI-I) carries both digital and analogue signals, and is compatible with VGA and HDMI, using an adapter.

DVI-D Cable

DVI Digital (DVI-D) carries only digital signals and is compatible with HDMI.

DVI-A Cable

DVI Analogue (DVI-A) is analogue only and is compatible with VGA.
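The compatibility rules for DVI-I, DVI-D and DVI-A come down to matching signal types: VGA needs an analogue signal and HDMI needs a digital one. A minimal sketch of that lookup follows; the dictionary and function names are hypothetical and exist only for this illustration.

```python
# Signal types carried by each DVI variant, as described above.
DVI_SIGNALS = {
    "DVI-I": {"digital", "analogue"},
    "DVI-D": {"digital"},
    "DVI-A": {"analogue"},
}

# Signal type each non-DVI port expects.
TARGET_SIGNAL = {"VGA": "analogue", "HDMI": "digital"}


def adapter_works(dvi_type: str, target: str) -> bool:
    """Return True if a simple adapter can link this DVI variant to the target port."""
    return TARGET_SIGNAL[target] in DVI_SIGNALS[dvi_type]


for dvi_type in DVI_SIGNALS:
    for target in TARGET_SIGNAL:
        print(dvi_type, "->", target, adapter_works(dvi_type, target))
```

The sketch only covers plain adapters that pass a signal through unchanged. Active converters that translate between analogue and digital are a separate category and are not modelled here.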

Dual-Link DVI Cable

DVI-DL (dual-link) adds 6 pins to the middle of the array, and this allows greater data transmission, giving a reliable 2560×1600 display at 60 Hz.
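As a rough way to see why 2560×1600 at 60 Hz calls for dual-link, the required pixel clock can be compared with the 165 MHz ceiling that the DVI 1.0 specification sets for a single link. The sketch below is an estimate under stated assumptions: the function name is invented for this example, and the 10% blanking allowance is an assumed figure that roughly matches reduced-blanking timings rather than a value taken from any particular display.

```python
import math

# Maximum pixel clock for one TMDS link in DVI 1.0.
SINGLE_LINK_MAX_PIXEL_CLOCK_HZ = 165_000_000


def dvi_links_needed(width: int, height: int, refresh_hz: int,
                     blanking_overhead: float = 0.10) -> int:
    """Estimate how many DVI links a display mode needs.

    Assumption: blanking adds about 10% on top of the visible pixels.
    A result above 2 means the mode is beyond dual-link by this estimate.
    """
    pixel_clock = width * height * refresh_hz * (1 + blanking_overhead)
    return math.ceil(pixel_clock / SINGLE_LINK_MAX_PIXEL_CLOCK_HZ)


print(dvi_links_needed(1920, 1200, 60))  # 1
print(dvi_links_needed(2560, 1600, 60))  # 2
```

By this estimate 1920×1200 at 60 Hz fits within one link, while 2560×1600 at 60 Hz needs the second link that the extra six pins provide.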